The Eval Section - Part #1: Data Collection

The evaluation section of any federal grant can seem like a mountain to climb. I know that for many of the organizational leaders I work with, words like "informed consent" and "quality improvement model" and "data analysis methodology" can sound like a headache.

However, I will tell you that a strong evaluation section is one of the ways you can separate yourself from the field and win that next six- or seven-figure federal grant.

This blog post is the first of a four-part series to help demystify the world of data and program evaluation and empower you to write like a data-driven leader in your area of practice. In Part 1, I share strategies for collecting data in real-world, applied settings. Topics include:

How to write strong performance measures and link them to outcomes

How to build a plan for data collection

What tools you can use to simplify your data collection processes

How to tie it all together with a performance measures table

On the go? Listen to the podcast episode that discusses this article.

Writing Performance Measures

The first step of your data collection process should be to carefully look at each of your performance measures - usually expressed as goals and outcomes. These should be written using the SMART (specific, measurable, achievable, realistic, and time-bound) framework and should focus on outcomes - what it is you hope to achieve. I like to ask the question, "What will the people we serve be able to do as a result of the programs and services in this grant?" to help jumpstart this conversation.

From a data collection perspective, your task is to design an approach that will allow you to demonstrate change between the start and completion of your grant. At the start, we use the term "benchmarks" to identify data points that you have already collected and that may indicate the problem or challenge you're trying to change.

Say, for example, that you want to reduce youth use of nicotine vapes. From a recent survey in your community - or using data like the YRBS - you've identified that 15% of youth in grades 6-12 report using nicotine vapes in the past 30 days. A great performance measure would be:

"Reduce youth nicotine vape use from 15% to 13% by the end of the grant period as measured on our community survey."

Not all performance measures have to be focused on long-term outcomes, like 30-day use. You can also write some that focus on what you will have accomplished during the grant period. For example:

"By June 30, 2025, project staff will have trained 75 health educators on how to incorporate evidence-based curricula to address nicotine vape use in 9th grade health classes."

In each case, you have something to measure that will show change between when you started and when you finished.
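If it helps to see that idea as arithmetic, here is a minimal sketch in Python. It treats each performance measure as a starting value and a target, then reports how much of the planned change has been achieved. The class and function names are my own illustration, the mid-grant values are hypothetical, and the starting count of zero trained educators is an assumption rather than something stated in the measure.

```python
from dataclasses import dataclass


@dataclass
class PerformanceMeasure:
    """One measurable target with a starting point and a goal."""
    description: str
    baseline: float  # value at the start of the grant
    target: float    # value you aim to reach by the end


def progress_toward_target(measure: PerformanceMeasure, current: float) -> float:
    """Return the share of the planned change achieved so far (1.0 = target met)."""
    planned_change = measure.target - measure.baseline
    if planned_change == 0:
        raise ValueError("Target must differ from baseline.")
    return (current - measure.baseline) / planned_change


# The two example measures from this post. Baseline of 0 educators is assumed.
vape_use = PerformanceMeasure("Youth 30-day nicotine vape use (%)", 15.0, 13.0)
educators = PerformanceMeasure("Health educators trained", 0, 75)

# Hypothetical mid-grant readings, for illustration only.
print(round(progress_toward_target(vape_use, 14.0), 2))  # 0.5
print(round(progress_toward_target(educators, 30), 2))   # 0.4
```

Because the calculation divides by the planned change, it works the same way whether the goal is to push a number down (vape use) or up (educators trained).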

Building a Plan for Data Collection

So once you have your performance measures, how do you collect the data? Here, you will outline the who, when, where, and how of data collection. There is SO much on this topic that is unique to each project, but I'll outline a few approaches that I see successful grant applications take to handle data collection.

Identify dedicated people to be in charge of data collection. This could be a staff member or contractor, but the dedicated person should have data collection as one of their primary roles. This signals to your team that you have a person who will take ownership for data collection and be responsible for the planning, implementation, and reporting of your data and outcomes.

Build in regular processes for collecting data. This is one of the areas where many people really struggle. When and how should you collect data? While there are so many ways to approach this, a good rule of thumb is to build in data collection whenever you are already interacting with the people you serve. Good examples of this are:

During program enrollment and service intake sessions, while participants are completing consent forms and other critical documentation.

Immediately before or after program participants engage in a training, service, or activity.

You will have much greater success collecting data if you ask people when you're already interacting with them. Stand-alone data collection - like blind-emailing everyone independently of a program or service - is much less likely to yield high response rates.

Imagine each data collection process from the perspective of the participant. Think through exactly how you will collect the data. Here are some questions to help think through this process:

How will you ask people to participate, and how will you build in opportunities for informed consent?

Where will program participants complete your forms and surveys, or participate in your interviews and focus groups?

Which members of your team are best equipped to lead data collection, and how will you train them?

What can you do to ensure that your data collection processes are responsive to the cultural norms and expectations of program participants?

Budget for incentives. People are constantly being asked to complete surveys and provide feedback - and most will find it a tax on their precious time. Build resources into your budget for gift cards or other incentives that give your program participants some compensation for taking the time to help you meet data collection requirements. Consider also scaling the incentive to the amount of time you're asking people to give.

As a rule of thumb, think of $0.50-$1.00 per minute of someone's time. A 15-20 minute survey should probably include a $15-$20 gift card. A 50-minute focus group or interview should include a $25-$50 gift card. Check grant requirements, as some cap the amount you can offer for incentives.
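To turn that rule of thumb into a budget line, a small calculation like the one below can help. This is only a sketch: the function names, the 100-participant figure, and the $15 cap are hypothetical, and your funder's actual cap (if any) should replace the one shown.

```python
from typing import Optional, Tuple


def incentive_range(minutes: float,
                    low_rate: float = 0.50,
                    high_rate: float = 1.00) -> Tuple[float, float]:
    """Return the (low, high) per-person incentive for a task of this length."""
    return (minutes * low_rate, minutes * high_rate)


def incentive_budget(minutes: float,
                     participants: int,
                     rate: float = 1.00,
                     cap: Optional[float] = None) -> float:
    """Return the total incentive cost, applying a per-person cap if one is given."""
    per_person = minutes * rate
    if cap is not None:
        per_person = min(per_person, cap)
    return per_person * participants


# A 50-minute focus group at $0.50-$1.00 per minute.
print(incentive_range(50))  # (25.0, 50.0)

# Hypothetical: a 20-minute survey for 100 participants at $1.00 per minute,
# first with no cap and then with an assumed $15 per-person cap.
print(incentive_budget(20, 100))          # 2000.0
print(incentive_budget(20, 100, cap=15))  # 1500.0
```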

Be realistic. Sampling strategies are important, as you want the data you actually collect to reflect the people you have served. I've seen many grant applications that overpromise on how many people they think will complete their surveys and attend their programs. As a back-of-the-napkin rule of thumb, assume that your data collection response rates will look something like the following:

60%-75% response rates from surveys and forms administered at the beginning and end of your program (assuming low attrition rates)

10%-20% response rates from stand-alone forms and surveys with incentives to a community that is familiar with your work

0%-5% response rates from stand-alone forms and surveys with no incentives
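Those ranges make it easy to sanity-check a proposal before you promise a number. The sketch below uses the back-of-the-napkin rates above to estimate how many responses to expect, and how many people you would need to reach to hit a target using the low end of each range. The dictionary keys, function names, and the 200-person and 100-response figures are hypothetical.

```python
# Rule-of-thumb response rates from this post, as whole percents (low, high).
RESPONSE_RATES = {
    "in_program": (60, 75),
    "standalone_with_incentive": (10, 20),
    "standalone_no_incentive": (0, 5),
}


def expected_responses(invited: int, method: str) -> tuple:
    """Return the (low, high) number of completed responses to expect."""
    low_pct, high_pct = RESPONSE_RATES[method]
    return (invited * low_pct // 100, invited * high_pct // 100)


def invites_needed(target_responses: int, method: str):
    """Return how many people to invite, planning on the low-end rate.

    Returns None when the low-end rate is 0%, since no number of
    invitations can be counted on to reach the target.
    """
    low_pct, _ = RESPONSE_RATES[method]
    if low_pct == 0:
        return None
    # Integer ceiling division avoids floating-point rounding surprises.
    return (target_responses * 100 + low_pct - 1) // low_pct


print(expected_responses(200, "in_program"))              # (120, 150)
print(invites_needed(100, "in_program"))                  # 167
print(invites_needed(100, "standalone_with_incentive"))   # 1000
print(invites_needed(100, "standalone_no_incentive"))     # None
```

Planning on the low end is a deliberately conservative choice. If you end up with more responses than you promised, nobody complains.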

Identifying Data Collection Tools

Quality survey and data tools are essential to making your data collection project simple. Here are a few of my favorites and how they might simplify your work:

Google Forms. This is the simple, free, and easy-to-access tool that everyone loves to use. For smaller-scale projects with limited privacy and security needs, this can be a simple go-to solution for gathering survey responses and other form-based data.

Zoho Survey. I really like Zoho Survey for data collection work if you need something a little more robust. I particularly like that you can brand/white-label your surveys, develop more complex skip logic and piping, set up trigger emails once forms are completed, and do offline data collection. It also has some more enhanced options for data privacy and security that may be important for your project. Comparable tools include Jotform and Qualtrics, among many others.

Google Sheets / Excel. So much can be done with more advanced uses of traditional spreadsheet tools. I like to have one or more sheets dedicated to housing "raw" data while using one or more other sheets for dashboarding. Tools like the pivot table and formulas like the amazing VLOOKUP can help you take data from your surveys and forms and represent them in the ways you need for grant reporting requirements. For more advanced dashboarding, take a look at Tableau and Power BI.

Zapier. One of the areas where data collection can take a lot of time is in exporting, copy-pasting, and otherwise moving your data around. Zapier can automate so much of this - it's the ultimate connector tool to help you integrate one tool with another. I like to use it to connect forms and survey tools to spreadsheets, though there are many other ways it can be used. Double-check with your privacy and security requirements to ensure that you are in compliance prior to use.

Case management tools. For more complex projects that require repeated interactions with program participants, you may want to look into case management tools. Social Solutions' Apricot is a good example for those working in human services, and SimplePractice is great for clinical mental health settings. Know, however, that implementing a tool like this requires a lot of work and organizational buy-in - so budget extra time for implementation and rollout.

Always take into consideration the data privacy and security requirements of your program. Not all tools will have sufficient security protocols for all projects - and when in doubt, consult with a technical or legal expert to help you determine which tools may be a good fit for your needs.
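For readers who are curious what the "raw data sheet plus summary sheet" pattern from the Google Sheets / Excel item looks like outside a spreadsheet, here is a rough Python equivalent. The grouping step plays the role of a pivot table, and the dictionary lookup plays the role of VLOOKUP. All of the rows, site codes, and site names are made up for illustration.

```python
from collections import Counter

# Hypothetical "raw" rows, as they might be exported from a survey tool.
raw_rows = [
    {"participant_id": "P1", "site_code": "A", "completed_post_survey": True},
    {"participant_id": "P2", "site_code": "A", "completed_post_survey": False},
    {"participant_id": "P3", "site_code": "B", "completed_post_survey": True},
    {"participant_id": "P4", "site_code": "B", "completed_post_survey": True},
]

# Hypothetical lookup table, standing in for a VLOOKUP range.
site_names = {"A": "North High School", "B": "South High School"}


def completions_by_site(rows, lookup):
    """Count completed post-surveys per site, like a simple pivot table."""
    counts = Counter(
        lookup.get(row["site_code"], "Unknown site")
        for row in rows
        if row["completed_post_survey"]
    )
    return dict(counts)


print(completions_by_site(raw_rows, site_names))
# {'North High School': 1, 'South High School': 2}
```

The point is the same as in a spreadsheet: leave the raw rows untouched and build every summary from them, so a reporting change never means re-entering data.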

Tying it All Together

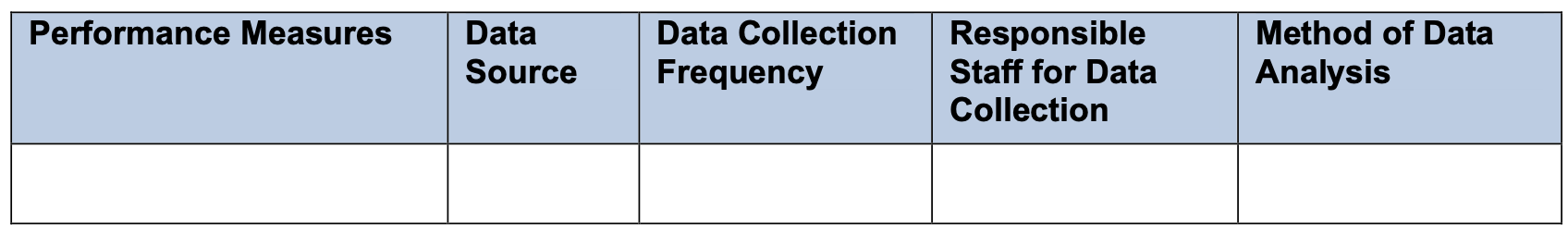

Once you have your performance measures written and you've answered the how-to questions of who, when, and where data are to be collected, it's time to organize it into a table. Most federal grants in the health/human services arena have some kind of table that links your performance measures to the means by which you will collect and report on data for those performance measures.

Performance measures reporting template as seen in SAMHSA's FY24 Partnerships for Success Grant

This table is so important to the success of this section, as it spells out exactly how all of the logistical questions of data collection will be answered for each of your identified performance measures. Note that this is also how you can show how closely your proposal is aligned across the problem statement, proposed activities, budget, and evaluation sections. I strongly recommend you complete this section as a team, as collecting data on each performance measure will require the input and buy-in of multiple team members to make it happen.

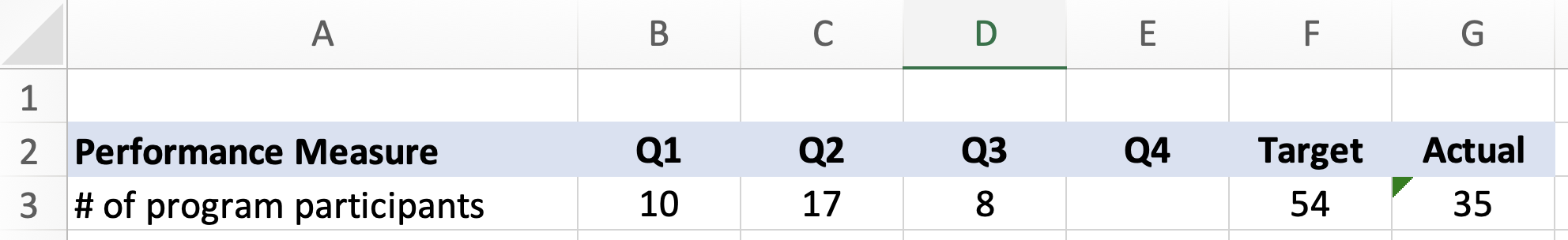

I really like using this section to also model the reporting I do for the clients I work with. You can create a spreadsheet that lists every performance measure and includes cells next to each one for the reporting time frame (often quarterly or monthly). Seeing how the data are entered over time allows you to compare progress across different periods of the grant and helps you understand where you may be on track, exceeding, or behind in meeting your performance measure targets. For example:

Example Reporting Spreadsheet
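The same "on track, exceeding, or behind" check can be written as a short calculation. This sketch compares cumulative progress against a straight-line pace toward the target, with a 10% tolerance band on either side. The straight-line pace, the tolerance, the four-quarter time frame, and the quarterly counts are all assumptions for illustration. Many programs ramp up slowly, so adjust the expected pace to fit your own timeline.

```python
from itertools import accumulate


def pace_status(cumulative: float, target: float,
                periods_elapsed: int, total_periods: int,
                tolerance: float = 0.10) -> str:
    """Compare cumulative progress with a straight-line pace toward the target."""
    expected = target * periods_elapsed / total_periods
    if cumulative >= expected * (1 + tolerance):
        return "exceeding"
    if cumulative >= expected * (1 - tolerance):
        return "on track"
    return "behind"


# Hypothetical quarterly counts of health educators trained (target: 75).
quarterly_trained = [12, 24, 30]

for quarter, cumulative in enumerate(accumulate(quarterly_trained), start=1):
    print(f"Q{quarter}: {cumulative} trained, {pace_status(cumulative, 75, quarter, 4)}")
# Q1: 12 trained, behind
# Q2: 36 trained, on track
# Q3: 66 trained, exceeding
```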

And that's it! Data collection can seem tricky, but with a little thoughtful planning, it's manageable - and can be the one thing that distinguishes you from competitors in grant writing.

Up Next

This article is the first of a four-part series on writing the evaluation section of your federal grant proposals. Upcoming topics include:

Part #2: Confidentiality, Privacy, and Informed Consent

Part #3: Data Analysis

Part #4: Reporting, Communication, Continuous Improvement

Ready to get started?

Looking for some external expertise? I've worked with organizations to apply for local, state, and federal grants to win multi-year awards. Reach out to learn more about how you can improve your grant writing with an excellent evaluation section to win your next federal grant.

—

Note: this blog post is for informational purposes only and does not, and is not intended to, constitute legal or medical advice. Readers of this blog post should contact their attorney to obtain advice with respect to any particular legal matter.